How to Run Open WebUI Locally on Your Mac: A Step-by-Step Guide

Run Open WebUI easily on a MacBook Air M2. Follow my guide for setup and automation to get ChatGPT-like responses locally.

If you're interested in running LLMs locally on your Mac, you may have run into problems with desktop tools like Msty, GPT4All, and others. I hit the same issues on my MacBook Air M2 with 8 GB of RAM: none of these tools gave me satisfactory responses. Whether it was the memory limit, the models I picked, or the prompts I used, the results were underwhelming.

# Introduction to Open WebUI

When Simon Willison shared his success with running Open WebUI using uvx, I decided to give it a try.

Though I'm still getting accustomed to it, here's how I successfully installed Open WebUI. The steps generally go like this:

- Install uv (which includes uvx)
- Initialize a Python virtual environment
- Install Open WebUI
- Run the application

For those who prefer automation, you can add a script to your shell. Here's a detailed breakdown:

# Step-by-Step Installation Guide

# Install uv (includes uvx)

```shell
brew install uv
```
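If you want to confirm the install before moving on, here is a small helper that reports any command missing from your PATH. `check_prereqs` is a hypothetical name of my own, not part of uv or Homebrew; it only uses the shell built-in `command -v`.

```shell
# Hypothetical helper: print "missing: <cmd>" for every argument that is
# not found on PATH, and return 1 if anything is missing (0 otherwise).
check_prereqs() {
  local missing=0
  for cmd in "$@"; do
    if ! command -v "$cmd" >/dev/null 2>&1; then
      echo "missing: $cmd"
      missing=1
    fi
  done
  return "$missing"
}
```

For example, `check_prereqs brew uv uvx` prints nothing and succeeds once Homebrew and uv are installed.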

# Set Up the Environment

```shell
mkdir openwebui && cd openwebui
uv init --python=3.11 .
uv venv
source .venv/bin/activate
```

# Install Open WebUI

```shell
uv pip install open-webui
```

# Run Open WebUI

```shell
open-webui serve
```

Open WebUI is now running. It listens on all network interfaces (0.0.0.0) on port 8080, so open http://localhost:8080/ in your browser.
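The server can take a little while to come up, especially on the first run. Rather than refreshing the browser, you could poll it until it answers. This is a minimal sketch assuming `curl` (which ships with macOS) and the default address above; `wait_for_webui` is a hypothetical helper name.

```shell
# Hypothetical helper: poll a URL once per second until it returns a
# successful HTTP response or the timeout (in seconds) runs out.
# Defaults assume Open WebUI's default address, http://localhost:8080/.
wait_for_webui() {
  local url="${1:-http://localhost:8080/}"
  local timeout="${2:-60}"
  local waited=0
  while [ "$waited" -lt "$timeout" ]; do
    # -f makes HTTP errors count as failures; --max-time caps each attempt
    if curl -fsS --max-time 2 -o /dev/null "$url" 2>/dev/null; then
      echo "Open WebUI is up at $url"
      return 0
    fi
    sleep 1
    waited=$((waited + 1))
  done
  echo "Timed out after ${timeout}s waiting for $url" >&2
  return 1
}
```

With the server starting in another terminal, `wait_for_webui && open http://localhost:8080/` opens the page in your default browser as soon as it responds.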

# Automate the Startup with a Script

Typing multiple commands to start Open WebUI can be cumbersome, so I asked Claude for a solution to automate the process while also logging outputs.

> I have open web ui installed via pip in a virtual environment. I invoke it via `source .venv/bin/activate` and run `open-webui serve`. I want to automate this whenever I open the shell, and pipe the log to a file. How can I do this? I should also be able to stop web-ui.

Here's the script it provided:

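```shell
#!/bin/bash

# Function to start OpenWebUI
startwebui() {
  if pgrep -f "open-webui serve" > /dev/null; then
    echo "OpenWebUI is already running"
    return 1
  fi

  # Create the log directory if it doesn't exist yet
  mkdir -p "$HOME/logs"

  # Activate the venv (assumed to be in ~/openwebui, as in the setup steps)
  # and launch the server in the background, sending stdout and stderr to the log
  (source "$HOME/openwebui/.venv/bin/activate" &&
    open-webui serve > "$HOME/logs/webui.log" 2>&1 &)

  echo "OpenWebUI started. Logs at ~/logs/webui.log"
}

# Function to stop OpenWebUI
stopwebui() {
  # pkill succeeds only if it found a matching process
  if pkill -f "open-webui serve"; then
    echo "OpenWebUI stopped"
  else
    echo "OpenWebUI is not running"
  fi
}
```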
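Since the output goes to a log file, a companion function for reading it can be handy. This is a hypothetical addition (`webuilogs` is my own name) that assumes the same `~/logs/webui.log` path used by `startwebui`.

```shell
# Hypothetical companion to startwebui/stopwebui: print the last N lines
# of the Open WebUI log (50 by default), or fail if no log exists yet.
webuilogs() {
  local log="$HOME/logs/webui.log"
  local count="${1:-50}"
  if [ ! -f "$log" ]; then
    echo "No log file at $log yet" >&2
    return 1
  fi
  tail -n "$count" "$log"
}
```

`webuilogs 20` shows the last 20 lines; to follow the log live instead, `tail -f ~/logs/webui.log` works directly.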

# Instructions to Implement the Script

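- Create the folders for the script and its log, then save the script above as ~/scripts/webui-control.sh:

  ```shell
  mkdir -p ~/scripts ~/logs
  ```

- Make the script executable (optional, since it is sourced rather than run directly):

  ```shell
  chmod +x ~/scripts/webui-control.sh
  ```

- Add this line to your shell configuration file (e.g., ~/.zshrc):

  ```shell
  source ~/scripts/webui-control.sh
  ```

- Reload the configuration, or open a new terminal window:

  ```shell
  source ~/.zshrc
  ```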
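If you'd rather script the ~/.zshrc step than edit the file by hand, a tiny helper can append the line only when it isn't already there, so running it twice doesn't leave duplicates. `add_line_once` is a hypothetical name; it relies only on `grep` and `printf`.

```shell
# Hypothetical helper: append a line to a file unless that exact line
# (whole-line, fixed-string match) is already present.
add_line_once() {
  local line="$1" file="$2"
  touch "$file"
  grep -qxF "$line" "$file" || printf '%s\n' "$line" >> "$file"
}
```

Usage, assuming the paths above: `add_line_once 'source ~/scripts/webui-control.sh' ~/.zshrc`. The single quotes keep `~` literal, so zsh expands it when it reads ~/.zshrc.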

# Start Open WebUI Easily

Now, whenever you want to start Open WebUI, type `startwebui` in your terminal and visit http://localhost:8080/ to use a ChatGPT-like interface running on your own machine. Run `stopwebui` to shut it down.

# Selecting models to play with

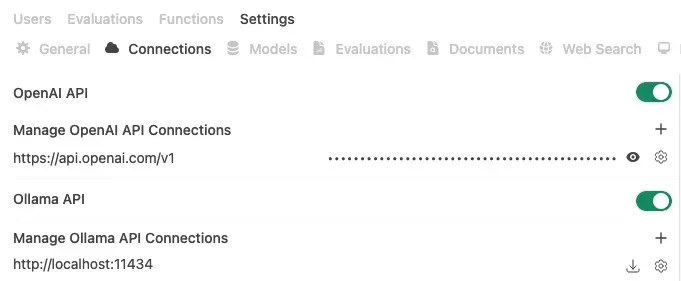

You have two options for the models Open WebUI works with. I'm using an OpenAI API key to access OpenAI's models, such as GPT-4o.

# 1. OpenAI API

Go to OpenAI API Key Settings and create a new API key. If you don't have an OpenAI account, you'll need to sign up first. Then add the key to the OpenAI API connection in Open WebUI's admin settings.
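Before pasting the key into Open WebUI, you can check that it works from the terminal. This sketch assumes the key is exported as `OPENAI_API_KEY` and calls OpenAI's `GET /v1/models` endpoint, which lists the models the key can access; `check_openai_key` is a hypothetical helper name.

```shell
# Hypothetical helper: verify that OPENAI_API_KEY is set and accepted by
# the OpenAI API. curl -f turns HTTP errors (e.g. 401 for a bad key)
# into a non-zero exit status.
check_openai_key() {
  if [ -z "${OPENAI_API_KEY:-}" ]; then
    echo "OPENAI_API_KEY is not set" >&2
    return 1
  fi
  curl -fsS https://api.openai.com/v1/models \
    -H "Authorization: Bearer $OPENAI_API_KEY" -o /dev/null
}
```

Usage: `export OPENAI_API_KEY="your-key-here"`, then `check_openai_key && echo "key OK"`.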

# 2. Locally running models with Ollama

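Another option is to run models locally with Ollama and let Open WebUI connect to it. With 8 GB of RAM, you'll likely be limited to smaller models.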
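To try this route, you would install Ollama (for example with `brew install ollama`), start it with `ollama serve`, and pull a small model such as `ollama pull llama3.2`. By default, Open WebUI expects Ollama at http://localhost:11434, configurable through its `OLLAMA_BASE_URL` setting. The sketch below checks whether that server is reachable by requesting `/api/tags`, Ollama's endpoint for listing downloaded models. `ollama_up` is a hypothetical name, and reusing `OLLAMA_BASE_URL` as its override is my own assumption.

```shell
# Hypothetical helper: report whether an Ollama server answers at
# $OLLAMA_BASE_URL (default http://localhost:11434) by requesting /api/tags.
ollama_up() {
  local url="${OLLAMA_BASE_URL:-http://localhost:11434}"
  if curl -fsS --max-time 2 -o /dev/null "$url/api/tags" 2>/dev/null; then
    echo "Ollama is reachable at $url"
  else
    echo "Ollama is not reachable at $url" >&2
    return 1
  fi
}
```

If `ollama_up` succeeds, models you've pulled should appear in Open WebUI's model picker.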

# Enjoy locally running LLMs

I encourage you to try running Open WebUI locally and experience the benefits firsthand.

Got comments or questions? Share them with me on X or Bluesky.

Under: #aieconomy, #tech, #code